[toc]

Deploying OpenStack Rocky on CentOS 7

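Passwords used in this Rocky deployment

| Password name | Description |
|---|---|
| Database password (no variable used) | Root password for the database |
| ADMIN_PASS | Password of user admin |
| CINDER_DBPASS | Database password for the Block Storage service |
| CINDER_PASS | Password of Block Storage service user cinder |
| DASH_DBPASS | Database password for the Dashboard |
| DEMO_PASS | Password of user demo |
| GLANCE_DBPASS | Database password for the Image service |
| GLANCE_PASS | Password of Image service user glance |
| KEYSTONE_DBPASS | Database password for the Identity service |
| METADATA_SECRET | Secret for the metadata proxy |
| MYUSER_PASS | Password of user myuser |
| NEUTRON_DBPASS | Database password for the Networking service |
| NEUTRON_PASS | Password of Networking service user neutron |
| NOVA_DBPASS | Database password for the Compute service |
| NOVA_PASS | Password of Compute service user nova |
| PLACEMENT_DBPASS | Database password for the Placement service |
| PLACEMENT_PASS | Password of Placement service user placement |
| RABBIT_PASS | Password of RabbitMQ user openstack |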

Lab environment

| Role | IP | Hostname | Default gateway | Hardware | Virtualization | Firewall / SELinux | OS | Kernel |
|---|---|---|---|---|---|---|---|---|
| Controller node | 172.30.100.4/16 | openstack-controller | 172.30.255.253 | 4 vCPU, 16 GB RAM, 40 GB + 100 GB disks | Enabled | Disabled | CentOS 7.6 | 3.10.0-957.21.3.el7.x86_64 |
| Compute node 1 | 172.30.100.5/16 | openstack-compute01 | 172.30.255.253 | 4 vCPU, 16 GB RAM, 40 GB + 100 GB disks | Enabled | Disabled | CentOS 7.6 | 3.10.0-957.21.3.el7.x86_64 |
| Block storage node 1 | 172.30.100.6/16 | openstack-block01 | 172.30.255.253 | 4 vCPU, 16 GB RAM, 40 GB + 100 GB disks | Enabled | Disabled | CentOS 7.6 | 3.10.0-957.21.3.el7.x86_64 |
| Object storage node 1 | 172.30.100.7/16 | openstack-object01 | 172.30.255.253 | 4 vCPU, 16 GB RAM, 40 GB + 50 GB + 50 GB disks | Enabled | Disabled | CentOS 7.6 | 3.10.0-957.21.3.el7.x86_64 |
| Object storage node 2 | 172.30.100.8/16 | openstack-object02 | 172.30.255.253 | 4 vCPU, 16 GB RAM, 40 GB + 50 GB + 50 GB disks | Enabled | Disabled | CentOS 7.6 | 3.10.0-957.21.3.el7.x86_64 |

1. Build a local yum repository from the Rocky RPM packages

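The Rocky offline packages have been uploaded to Baidu Netdisk (extraction code: 4gam).

Because the official Rocky yum repository has changed, installing from it on CentOS 7 leaves some packages unavailable, so this guide installs from a local yum repository built from the offline packages instead.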

1.1 Install the createrepo command

On the controller node:

yum -y install createrepo

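1.2 Create the repository metadata

On the controller node (the offline packages must already be uploaded to /opt/openstack-rocky, as described in 1.5):

createrepo /opt/openstack-rocky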

1.3 Install nginx

On the controller node:

yum -y install nginx

systemctl enable nginx && systemctl start nginx

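1.4 Configure nginx

On the controller node:

# Edit the nginx configuration. The yum-installed nginx serves /usr/share/nginx/html by default; out of personal habit, this guide adds a separate virtual host that listens on port 88 and serves /opt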

cat > /etc/nginx/conf.d/openstack-rocky.repo.conf <<EOF

server {

listen 88;

root /opt;

location /openstack-rocky {

autoindex on;

autoindex_exact_size off;

autoindex_localtime on;

}

}

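EOF

# nginx was already started in 1.3; check the syntax and reload it so the new virtual host takes effect

nginx -t && systemctl reload nginx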

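1.5 Create the local yum repository file

On all nodes:

# Create the repo file; the offline packages must be uploaded in advance to /opt on the controller node, in a directory named openstack-rocky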

cat >/etc/yum.repos.d/openstack-rocky.repo <<EOF

[openstack]

name=openstack

baseurl=http://172.30.100.4:88/openstack-rocky

enabled=1

gpgcheck=0

EOF
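Because the same repo file has to be created on all five nodes, it can help to generate it from the controller IP instead of retyping it. This is a minimal sketch: render_repo is a hypothetical helper name, and it assumes the nginx virtual host from 1.4 (port 88, /openstack-rocky path).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the local yum repo definition for a given
# controller IP and nginx port (default 88, as configured in 1.4).
render_repo() {
  local controller_ip="$1" port="${2:-88}"
  cat <<EOF
[openstack]
name=openstack
baseurl=http://${controller_ip}:${port}/openstack-rocky
enabled=1
gpgcheck=0
EOF
}

# Usage on each node (as root):
#   render_repo 172.30.100.4 88 > /etc/yum.repos.d/openstack-rocky.repo
#   yum makecache
```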

1.6 Build the local yum cache

On all nodes:

yum makecache

2. Base environment configuration

2.1 Disable the firewall and SELinux

On all nodes:

# Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

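# Disable SELinux

# Temporary change (takes effect immediately)

setenforce 0

# Permanent change (takes effect after a reboot); match the SELINUX= line instead of relying on it being line 7

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config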

2.2 Configure hosts resolution

On all nodes:

cat >> /etc/hosts << EOF

172.30.100.4 openstack-controller

172.30.100.5 openstack-compute01

172.30.100.6 openstack-block01

172.30.100.7 openstack-object01

172.30.100.8 openstack-object02

EOF

2.3 Configure chrony so that all nodes keep consistent time

2.3.1 Install chrony

On all nodes:

yum -y install chrony

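2.3.2 Edit /etc/chrony.conf

On the controller node (the line numbers below assume an unmodified CentOS 7 /etc/chrony.conf, where lines 3-6 are the default pool servers):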

sed -i.bak '3,6d' /etc/chrony.conf && \

sed -i '3cserver ntp1.aliyun.com iburst' /etc/chrony.conf && \

sed -i '23callow 172.30.0.0/16' /etc/chrony.conf

On the compute, storage, and object nodes:

sed -i '3,6d' /etc/chrony.conf && \

sed -i '3cserver openstack-controller iburst' /etc/chrony.conf
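The sed commands above edit fixed line numbers, so they only work on an untouched stock chrony.conf and misbehave if run twice. A pattern-based alternative is sketched below, assuming GNU sed: it comments out existing server/pool lines and appends the wanted entries. configure_chrony is a hypothetical helper, not part of chrony.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point chrony at one upstream server and, optionally,
# allow NTP clients from a subnet. Matches patterns instead of line numbers.
# Requires GNU sed (-i, -E).
configure_chrony() {
  local conf="$1" upstream="$2" subnet="${3:-}"
  # Comment out every active server/pool line (e.g. the default CentOS pool servers).
  sed -i -E 's/^(server|pool)[[:space:]]/#&/' "$conf"
  # Add the desired upstream source.
  echo "server ${upstream} iburst" >> "$conf"
  # Controller only: let the management network sync from this node (added once).
  if [ -n "$subnet" ] && ! grep -qxF "allow ${subnet}" "$conf"; then
    echo "allow ${subnet}" >> "$conf"
  fi
}

# Controller:          configure_chrony /etc/chrony.conf ntp1.aliyun.com 172.30.0.0/16
# Other nodes:         configure_chrony /etc/chrony.conf openstack-controller
# Then on every node:  systemctl restart chronyd
```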

2.3.3 Start the service and enable it at boot

On all nodes:

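# Use restart rather than start: chronyd is often already running on CentOS 7, and start would not load the edited config

systemctl enable chronyd && systemctl restart chronyd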

2.3.4 Verify

On the controller node:

$ chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 120.25.115.20 2 6 37 29 +43us[ -830us] +/- 22ms

On the compute, storage, and object nodes:

$ chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

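^? openstack-controller 3 6 200 50 +1319ms[+1319ms] +/- 14.4s

In this output, ^* marks the source chronyd is currently synchronized to, while ^? means the source is not (yet) usable, which is normal right after chronyd starts. Re-run chronyc sources after a minute or two; the controller should then be shown as ^*.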
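To script this check instead of reading the output by eye, the output of chronyc sources can be tested for a line that begins with ^*. A minimal sketch: has_synced_source and wait_for_chrony_sync are hypothetical helper names, and the budget of 30 tries at 2-second intervals is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `chronyc sources` output on stdin and succeed only
# if some line starts with "^*" (the source chronyd is synchronized to).
has_synced_source() {
  grep -q '^\^\*'
}

# Poll chronyc until a synchronized source appears or the retries run out.
wait_for_chrony_sync() {
  local tries="${1:-30}" i
  for ((i = 0; i < tries; i++)); do
    if chronyc sources | has_synced_source; then
      return 0
    fi
    sleep 2
  done
  return 1
}

# Usage on a compute/storage/object node:
#   wait_for_chrony_sync && echo "time is synchronized"
```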

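2.4 Install the OpenStack client (normally via the official OpenStack yum repository)

On all nodes:

This guide installs from the local repository, so centos-release-openstack-rocky does not need to be installed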

# yum -y install centos-release-openstack-rocky

yum -y install python-openstackclient

The base environment is now configured on all nodes!

3. Controller node base environment

3.1 Install and configure the MariaDB database

3.1.1 Install the packages

yum -y install mariadb mariadb-server python2-PyMySQL

3.1.2 Edit the configuration file

cat > /etc/my.cnf.d/openstack.cnf <<EOF

[mysqld]

bind-address = 172.30.100.4

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

3.1.3 Start MariaDB and enable it at boot

systemctl enable mariadb && systemctl start mariadb

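3.1.4 Secure the database installation

$ mysql_secure_installation

Enter current password for root (enter for none): # no password yet, just press Enter

Set root password? [Y/n] n # do not set a root password

Remove anonymous users? [Y/n] y # remove anonymous users

Disallow root login remotely? [Y/n] y # disallow remote root login

Remove test database and access to it? [Y/n] y # remove the test database

Reload privilege tables now? [Y/n] y # reload the privilege tables

Because no root password is set, the mysql -e commands used later in this guide connect as root without a password; set one for anything beyond a lab.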

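3.2 Install the RabbitMQ message queue

OpenStack uses a message queue to coordinate operations and status information among services. The message queue service typically runs on the controller node.

RabbitMQ listens on two ports:

tcp/5672: the RabbitMQ service port

tcp/25672: the port used for communication between RabbitMQ nodes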

3.2.1 Install the package

yum -y install rabbitmq-server

3.2.2 Start RabbitMQ and enable it at boot

systemctl enable rabbitmq-server && systemctl start rabbitmq-server

3.2.3 Add the openstack user

$ rabbitmqctl add_user openstack RABBIT_PASS

Creating user "openstack"

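3.2.4 Grant the openstack user configure, write, and read permissions

The three ".*" patterns are, in order, the configure, write, and read permissions; ".*" matches every resource in the vhost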

$ rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

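3.2.5 Enable the RabbitMQ management plugin, which listens on tcp/15672

The plugin provides a web management UI; the default username and password are both guest (by default, RabbitMQ only allows guest to log in from localhost)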

$ rabbitmq-plugins enable rabbitmq_management

The following plugins have been enabled:

amqp_client

cowlib

cowboy

rabbitmq_web_dispatch

rabbitmq_management_agent

rabbitmq_management

Applying plugin configuration to rabbit@openstack-controller... started 6 plugins.

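3.3 Install memcached

The Identity service authentication mechanism uses Memcached to cache tokens. The memcached service runs on the controller node. For production deployments, we recommend enabling a combination of firewalling, authentication, and encryption to secure it.

memcached listens on tcp/udp port 11211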

3.3.1 Install the packages

yum -y install memcached python-memcached

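3.3.2 Edit the configuration file

Configure the service to use the management IP address of the controller node, so that other nodes can reach it over the management network: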

sed -i.bak '/OPTIONS/c OPTIONS="-l 127.0.0.1,::1,openstack-controller"' /etc/sysconfig/memcached

After the change, the file looks like this:

$ cat /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS="-l 127.0.0.1,::1,openstack-controller"

3.3.3 Start memcached and enable it at boot

systemctl enable memcached && systemctl start memcached

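3.4 Install etcd

OpenStack services may use etcd, a distributed reliable key-value store, for distributed key locking, storing configuration, keeping track of service liveness, and other scenarios.

The etcd service runs on the controller node.

etcd serves external clients on port 2379 and uses port 2380 for communication between cluster members.

3.4.1 Install the package

yum -y install etcd

3.4.2 Edit the configuration file

Edit /etc/etcd/etcd.conf and set ETCD_INITIAL_CLUSTER, ETCD_INITIAL_ADVERTISE_PEER_URLS, ETCD_ADVERTISE_CLIENT_URLS, and ETCD_LISTEN_CLIENT_URLS to the management IP address of the controller node, so that other nodes can reach it over the management network: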

export CONTROLLER_IP=172.30.100.4

cat > /etc/etcd/etcd.conf << EOF

# [Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://${CONTROLLER_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="http://${CONTROLLER_IP}:2379"

ETCD_NAME="openstack-controller"

# [Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${CONTROLLER_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://${CONTROLLER_IP}:2379"

ETCD_INITIAL_CLUSTER="openstack-controller=http://${CONTROLLER_IP}:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

3.4.3 Start etcd and enable it at boot

systemctl enable etcd && systemctl restart etcd

The controller node base environment is now installed!

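4. Install the Identity service (keystone) on the controller node

Keystone provides authentication, authorization, and a service catalog:

Authentication: verifying a username and password

Authorization: granting access rights, much like tech sites such as Juejin or CSDN let you log in with a WeChat or QQ account

Service catalog: works like an address book. To reach OpenStack's image, network, storage, and other services, you only need to find keystone instead of remembering each service's address separately

Every service installed later must be registered in keystone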

4.1 Create the keystone database and grant access

mysql -e "CREATE DATABASE keystone;"

mysql -e "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'KEYSTONE_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'KEYSTONE_DBPASS';"
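The same three statements (create the database, grant access from localhost, grant access from any host) are repeated later for glance, nova_api, nova, nova_cell0, and placement. As a sketch, they can be generated by a small function and piped into mysql. service_db_sql is a hypothetical helper, and it deliberately uses CREATE DATABASE IF NOT EXISTS (unlike the commands above) so that re-running it is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the CREATE DATABASE / GRANT statements for one
# OpenStack service database (MariaDB GRANT ... IDENTIFIED BY syntax).
service_db_sql() {
  local db="$1" user="$2" pass="$3" host
  printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
  # One grant for local socket/TCP access, one for access from any host.
  for host in localhost '%'; do
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
      "$db" "$user" "$host" "$pass"
  done
}

# Usage on the controller:
#   service_db_sql keystone keystone KEYSTONE_DBPASS | mysql
#   service_db_sql glance glance GLANCE_DBPASS | mysql
```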

4.2 Install and configure keystone

- keystone is served through Apache (httpd)

- mod_wsgi lets Apache run Python (WSGI) applications

- keystone listens on port 5000

4.2.1 Install the packages

yum -y install openstack-keystone httpd mod_wsgi openstack-utils.noarch

4.2.2 Edit /etc/keystone/keystone.conf

# In the [database] section, configure database access:

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@openstack-controller/keystone

# In the [token] section, configure the Fernet token provider:

[token]

provider = fernet

Apply the changes with the following commands:

\cp /etc/keystone/keystone.conf{,.bak}

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@openstack-controller/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet

md5sum of the resulting file

md5sum /etc/keystone/keystone.conf

e12c017255f580f414e3693bd4ccaa1a /etc/keystone/keystone.conf
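An md5 sum only tells you whether the whole file matches. To check an individual setting written by openstack-config, a small awk-based reader is sketched below. ini_get is a hypothetical helper, and it assumes simple "key = value" lines without continuation lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI-style
# file such as /etc/keystone/keystone.conf. Assumes plain "key = value" lines.
ini_get() {
  local file="$1" section="$2" key="$3"
  awk -v sec="[$section]" -v key="$key" '
    # A section header: remember whether it is the section we want.
    /^[ \t]*\[/ { in_sec = ($0 == sec); next }
    in_sec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      line = $0
      sub(/^[^=]*=[ \t]*/, "", line)   # strip "key = " and keep the value
      print line
      exit
    }
  ' "$file"
}

# Usage (should print fernet once 4.2.2 is done):
#   ini_get /etc/keystone/keystone.conf token provider
```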

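4.2.3 Populate the Identity service database

The command switches to the keystone user, uses /bin/sh as the shell, and runs the command given after -c

su -s /bin/sh -c "keystone-manage db_sync" keystone

The previous step creates the tables; the check below should report a nonzero number of tables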

$ mysql keystone -e "show tables;"|wc -l

45

4.2.4 Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

4.2.5 Bootstrap the Identity service

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \

--bootstrap-admin-url http://openstack-controller:5000/v3/ \

--bootstrap-internal-url http://openstack-controller:5000/v3/ \

--bootstrap-public-url http://openstack-controller:5000/v3/ \

--bootstrap-region-id RegionOne

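4.2.6 Configure the Apache HTTP server

Edit /etc/httpd/conf/httpd.conf and set the ServerName option to the controller node. The sed command below also changes httpd's Listen 80 to Listen 8080, presumably because the nginx installed in 1.3 already occupies port 80 with its default server; keystone itself is not affected, since wsgi-keystone.conf makes httpd listen on port 5000 separately

4.2.6.1 Edit the file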

\cp /etc/httpd/conf/httpd.conf{,.bak}

sed -i.bak -e '96cServerName openstack-controller' -e '/^Listen/c Listen 8080' /etc/httpd/conf/httpd.conf

md5sum of the resulting file

$ md5sum /etc/httpd/conf/httpd.conf

812165839ec4f2e87a31c1ff2ba423aa /etc/httpd/conf/httpd.conf

4.2.6.2 Create a symbolic link to the keystone WSGI configuration

ln -sf /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

4.2.7 Start Apache and enable it at boot

systemctl enable httpd && systemctl start httpd

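4.2.8 Configure the administrative account

The admin user (password ADMIN_PASS) was created by keystone-manage bootstrap in 4.2.5; export the following environment variables to authenticate as that user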

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-controller:5000/v3

export OS_IDENTITY_API_VERSION=3

4.3 Create a domain, projects, users, and roles

4.3.1 Create a domain

$ openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | ab6f853144384043a5dd648c154d0efe |

| name | example |

| tags | [] |

+-------------+----------------------------------+

4.3.2 Create the service project

# The service project is used later to hold the OpenStack service users glance, nova, and neutron

$ openstack project create --domain default \

--description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | f6696bc9511043ae9ec72d1c31a494f3 |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

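4.3.3 Regular (non-admin) tasks should use an unprivileged project and user

As an example, this guide creates the myproject project and the myuser user

4.3.3.1 Create the myproject project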

$ openstack project create --domain default \

--description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 5b9ccd294c364cc68747df85f9598c89 |

| is_domain | False |

| name | myproject |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

4.3.3.2 Create the myuser user

The password is MYUSER_PASS. Set it either non-interactively or interactively (pick one)

Non-interactive:

$ openstack user create --domain default \

--password MYUSER_PASS myuser

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | f7985ae93ad24f7784a5ea3e1f22109a |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Interactive:

openstack user create --domain default \

--password-prompt myuser

4.3.3.3 Create the myrole role

$ openstack role create myrole

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 9cb289f07a6d4bd6898dd863d616b164 |

| name | myrole |

+-----------+----------------------------------+

4.3.3.4 Add the myrole role to the myuser user in the myproject project

openstack role add --project myproject --user myuser myrole

4.3.4 Verify

4.3.4.1 Unset the temporary OS_AUTH_URL and OS_PASSWORD environment variables

unset OS_AUTH_URL OS_PASSWORD

4.3.4.2 As the admin user, request an authentication token

When prompted, enter the password ADMIN_PASS

$ openstack --os-auth-url http://openstack-controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2021-11-04T03:32:38+0000 |

| id | gAAAAABhg0ZG2yKJ00Myq8FQ33ibR6tN3I1Fu2xXJRN17usVIVPHiVJ2eJYQviKz9HeKWKEjmH_MLaWeiDZcW3QBQGjnT_Mbe9EEKqHSXKBJxo2etnI_kPCvxRoLPGE-XbevIWW6DYmsJqCJr32TdUG5wysC12ZbSWyVp25qyX_BKl_8KGSXXyM |

| project_id | a6c250532966417cae11b1dfb5f0f6cc |

| user_id | 20b791e627a741ed8b21e41027638986 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

4.3.4.3 As the myuser user created in the previous section, request an authentication token

When prompted, enter the password MYUSER_PASS

$ openstack --os-auth-url http://openstack-controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name myproject --os-username myuser token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2021-11-04T03:35:19+0000 |

| id | gAAAAABhg0bnYFRoP2qjzgTRmT7lojzV3WO9GkYv6qFu5Nhx9_WbhIV6EDfNBbuJa7EHjmfz5BvYAza9J6wC6ZF36_nHfVPVkq3xO4E7fHNTa914q79UKTkpikR2i5NfPNgo1FqeIa0snUQ2M2-JSqteLCZxLMYZRTa_ckdV12i9OTle5_-6wk8 |

| project_id | a150277718cd41439adbf88bbac6d1fe |

| user_id | d7d0fdf398d949038719c5f0c22fc379 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

4.4 Create OpenStack client environment scripts

4.4.1 Create the scripts

Create the admin-openrc file with the following content; this guide places it in /opt

cat > /opt/admin-openrc <<EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://openstack-controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

Create the demo-openrc file with the following content

cat > /opt/demo-openrc <<EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=MYUSER_PASS

export OS_AUTH_URL=http://openstack-controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

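4.4.2 Use the scripts

4.4.2.1 Load the admin-openrc file to populate the environment variables with the location of the Identity service and the admin project and user credentials

source /opt/admin-openrc

4.4.2.2 Request an authentication token

Note that expires is shown in UTC, which is 8 hours behind China Standard Time (UTC+8); use timedatectl to check the local time and time zone. By default a token expires after 1 hour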

$ openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2021-11-04T03:47:51+0000 |

| id | gAAAAABhg0nXv43sRRJ5ahS0P2z86nPoZGz7g-Y2v3jcLhW-QM5eTIj_39ncEktjGu1R1SAOM9cqMpmOHF26j8ur7L26fYJ8gyNoA-JC51ZWesc5mnr1FapD0dxqCmteL22RmA5gRtzjC5qHfbn_RjVNe-AjBNSL_OmtAEdr-kY5B2IO7kvt7ko |

| project_id | a6c250532966417cae11b1dfb5f0f6cc |

| user_id | 20b791e627a741ed8b21e41027638986 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
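Since expires is reported in UTC, converting it to Beijing time is a one-liner with GNU date. The sketch below assumes the +0000 suffix that keystone prints; expires_to_cst is a hypothetical helper, and TZ=CST-8 is a POSIX TZ string for UTC+8 that does not depend on the tzdata package (TZ=Asia/Shanghai is equivalent when tzdata is installed).

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a keystone "expires" value such as
# 2021-11-04T03:47:51+0000 (UTC) into China Standard Time (UTC+8).
# Requires GNU date; "CST-8" is a POSIX TZ string meaning UTC+8.
expires_to_cst() {
  local ts="${1%+0000}"      # drop the +0000 UTC offset
  ts="${ts/T/ }"             # 2021-11-04T03:47:51 -> 2021-11-04 03:47:51
  TZ=CST-8 date -d "${ts} UTC" '+%F %T'
}

# Usage with admin-openrc loaded:
#   expires_to_cst "$(openstack token issue -f value -c expires)"
```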

The keystone Identity service is now installed on the controller node!

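5. Install the Image service (glance) on the controller node

The OpenStack Image service includes the following components:

glance-api: accepts Image API calls for image discovery, retrieval, and storage

glance-registry: stores, processes, and retrieves metadata about images; metadata includes items such as size and type

The glance services listen on two ports:

glance-api 9292

glance-registry 9191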

5.1 Create the glance database and grant access

mysql -e "CREATE DATABASE glance;"

mysql -e "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';"

5.2 Source the admin credentials to gain access to admin-only CLI commands

source /opt/admin-openrc

5.3 Create the service credentials

5.3.1 Create the glance user

The password is GLANCE_PASS. Set it either non-interactively or interactively (pick one)

Non-interactive:

$ openstack user create --domain default --password GLANCE_PASS glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 36a88bb288464126837ebc19758bead6 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Interactive:

openstack user create --domain default --password-prompt glance

5.3.2 Add the admin role to the glance user in the service project

openstack role add --project service --user glance admin

5.3.3 Create the glance service entity

$ openstack service create --name glance \

--description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | ce5a424428d640c9adec06865d211916 |

| name | glance |

| type | image |

+-------------+----------------------------------+

5.3.4 Create the Image service API endpoints

$ openstack endpoint create --region RegionOne \

image public http://openstack-controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | bed29b8924114eee8b427f7a83f2cd64 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ce5a424428d640c9adec06865d211916 |

| service_name | glance |

| service_type | image |

| url | http://openstack-controller:9292 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

image internal http://openstack-controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 94f84d946e6f4463af82041caf2877b5 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ce5a424428d640c9adec06865d211916 |

| service_name | glance |

| service_type | image |

| url | http://openstack-controller:9292 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

image admin http://openstack-controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 16e947838d7948e6a0ec7feb7910b415 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ce5a424428d640c9adec06865d211916 |

| service_name | glance |

| service_type | image |

| url | http://openstack-controller:9292 |

+--------------+----------------------------------+

To delete an API endpoint, run openstack endpoint delete <endpoint-id>

Look up the endpoint ID with openstack endpoint list first, then delete by ID
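In this single-controller lab every service registers the same URL for its public, internal, and admin interfaces, so the three endpoint create commands can be generated in a loop. A sketch: endpoint_cmds is a hypothetical helper that only prints the commands, so they can be reviewed before being piped to sh with admin-openrc sourced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the three `openstack endpoint create` commands
# (public, internal, admin) for one service type, all using the same URL.
endpoint_cmds() {
  local service="$1" url="$2" region="${3:-RegionOne}" iface
  for iface in public internal admin; do
    echo "openstack endpoint create --region ${region} ${service} ${iface} ${url}"
  done
}

# Usage (review the output first, then apply):
#   source /opt/admin-openrc
#   endpoint_cmds image http://openstack-controller:9292 | sh
#   endpoint_cmds compute http://openstack-controller:8774/v2.1 | sh
```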

5.4 Install and configure the components

5.4.1 Install the package

yum -y install openstack-glance

5.4.2 Edit /etc/glance/glance-api.conf as follows

1. In the [database] section, configure database access

[database]

# ...

connection = mysql+pymysql://glance:GLANCE_DBPASS@openstack-controller/glance

2. In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-controller:5000

auth_url = http://openstack-controller:5000

memcached_servers = openstack-controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

# ...

flavor = keystone

3. In the [glance_store] section, configure the local file system store and the location of image files

[glance_store]

# ...

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Apply the changes with the following commands

\cp /etc/glance/glance-api.conf{,.bak}

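# Keep only section headers and active settings; [a-zA-Z] replaces [a-Z], which is an invalid range in the C locale

grep '^[a-zA-Z\[]' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf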

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@openstack-controller/glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://openstack-controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://openstack-controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers openstack-controller:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

md5sum of the resulting file

$ md5sum /etc/glance/glance-api.conf

768bce1167f1545fb55115ad7e4fe3ff /etc/glance/glance-api.conf

5.4.3 Edit /etc/glance/glance-registry.conf as follows

1. In the [database] section, configure database access

[database]

# ...

connection = mysql+pymysql://glance:GLANCE_DBPASS@openstack-controller/glance

2. In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-controller:5000

auth_url = http://openstack-controller:5000

memcached_servers = openstack-controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

# ...

flavor = keystone

Apply the changes with the following commands

\cp /etc/glance/glance-registry.conf{,.bak}

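# Keep only section headers and active settings; [a-zA-Z] replaces [a-Z], which is an invalid range in the C locale

grep '^[a-zA-Z\[]' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf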

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@openstack-controller/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken www_authenticate_uri http://openstack-controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://openstack-controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers openstack-controller:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

md5sum of the resulting file

$ md5sum /etc/glance/glance-registry.conf

ca0383d969bf7d1e9125b836769c9a2e /etc/glance/glance-registry.conf
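glance-api.conf and glance-registry.conf receive an identical [keystone_authtoken] block, and later services need the same block with a different user. As a sketch, the corresponding openstack-config commands can be printed for review and then piped to sh. authtoken_cmds is a hypothetical helper, and the values mirror the ones used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the openstack-config commands that set
# the [keystone_authtoken] block for one service config file.
authtoken_cmds() {
  local conf="$1" user="$2" pass="$3" ctl="${4:-openstack-controller}" kv
  for kv in \
    "www_authenticate_uri http://${ctl}:5000" \
    "auth_url http://${ctl}:5000" \
    "memcached_servers ${ctl}:11211" \
    "auth_type password" \
    "project_domain_name Default" \
    "user_domain_name Default" \
    "project_name service" \
    "username ${user}" \
    "password ${pass}"; do
    echo "openstack-config --set ${conf} keystone_authtoken ${kv}"
  done
}

# Usage: review the output, then apply it to both glance files
#   for f in /etc/glance/glance-api.conf /etc/glance/glance-registry.conf; do
#     authtoken_cmds "$f" glance GLANCE_PASS
#   done | sh
```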

5.4.4 Populate the Image service database

Ignore any deprecation messages in this output

$ su -s /bin/sh -c "glance-manage db_sync" glance

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:1352: OsloDBDeprecationWarning: EngineFacade is deprecated; please use oslo_db.sqlalchemy.enginefacade

expire_on_commit=expire_on_commit, _conf=conf)

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> liberty, liberty initial

INFO [alembic.runtime.migration] Running upgrade liberty -> mitaka01, add index on created_at and updated_at columns of 'images' table

INFO [alembic.runtime.migration] Running upgrade mitaka01 -> mitaka02, update metadef os_nova_server

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_expand01, add visibility to images

INFO [alembic.runtime.migration] Running upgrade ocata_expand01 -> pike_expand01, empty expand for symmetry with pike_contract01

INFO [alembic.runtime.migration] Running upgrade pike_expand01 -> queens_expand01

INFO [alembic.runtime.migration] Running upgrade queens_expand01 -> rocky_expand01, add os_hidden column to images table

INFO [alembic.runtime.migration] Running upgrade rocky_expand01 -> rocky_expand02, add os_hash_algo and os_hash_value columns to images table

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_expand02, current revision(s): rocky_expand02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database migration is up to date. No migration needed.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_contract01, remove is_public from images

INFO [alembic.runtime.migration] Running upgrade ocata_contract01 -> pike_contract01, drop glare artifacts tables

INFO [alembic.runtime.migration] Running upgrade pike_contract01 -> queens_contract01

INFO [alembic.runtime.migration] Running upgrade queens_contract01 -> rocky_contract01

INFO [alembic.runtime.migration] Running upgrade rocky_contract01 -> rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_contract02, current revision(s): rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database is synced successfully.

A nonzero table count means the sync succeeded

$ mysql glance -e "show tables;" | wc -l

16

5.4.5 Start the glance services and enable them at boot

systemctl enable openstack-glance-api openstack-glance-registry

systemctl start openstack-glance-api openstack-glance-registry

5.4.6 Verify operation

5.4.6.1 Source the admin credentials to gain access to admin-only CLI commands

source /opt/admin-openrc

5.4.6.2 Download the test image

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img
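A download that silently failed (for example, an HTML error page saved under the .img name) is a common reason for a broken image upload. A quick sanity check of the qcow2 magic bytes ("QFI" followed by 0xfb) before uploading is sketched below; is_qcow2 is a hypothetical helper, and qemu-img info, if installed, gives a more thorough check.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if FILE starts with the qcow2 magic bytes
# 0x51 0x46 0x49 0xfb ("QFI\xfb").
is_qcow2() {
  local magic
  # Read the first 4 bytes and render them as a compact hex string.
  magic="$(head -c 4 -- "$1" | od -An -tx1 | tr -d ' \n')"
  [ "$magic" = "514649fb" ]
}

# Usage before openstack image create:
#   is_qcow2 cirros-0.4.0-x86_64-disk.img && echo "looks like a qcow2 image"
```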

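5.4.6.3 Upload the image

Upload the image to the Image service using the QCOW2 disk format, bare container format, and public visibility, so that all projects can access it

To delete an image, run

glance image-delete <image-id>

Be sure to check the size field in the output; a size of 0 means the upload went wrong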

$ openstack image create "cirros" \

--file cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | 443b7623e27ecf03dc9e01ee93f67afe |

| container_format | bare |

| created_at | 2021-11-04T03:56:55Z |

| disk_format | qcow2 |

| file | /v2/images/ff6ea9e3-e409-41e1-a871-daf3f8ebfb9e/file |

| id | ff6ea9e3-e409-41e1-a871-daf3f8ebfb9e |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | a6c250532966417cae11b1dfb5f0f6cc |

| properties | os_hash_algo='sha512', os_hash_value='6513f21e44aa3da349f248188a44bc304a3653a04122d8fb4535423c8e1d14cd6a153f735bb0982e2161b5b5186106570c17a9e58b64dd39390617cd5a350f78', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 12716032 |

| status | active |

| tags | |

| updated_at | 2021-11-04T03:56:56Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

5.4.7 Confirm the upload and validate the image attributes

$ openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 94c96aab-d0b3-4340-835c-9a97108d0554 | cirros | active |

+--------------------------------------+--------+--------+

The glance Image service is now installed on the controller node!

6.控制节点和计算节点计算服务nova安装

nova相关服务

| 服务名称 | 作用 |

|---|---|

| nova-api | 接受并响应最终用户的计算API调用。该服务支持OpenStack Compute API。它执行一些策略并启动大多数编排活动,例如运行实例 |

| nova-api-metadata | 接受来自实例的元数据请求。nova-api-metadata当您在nova-network 安装时以多主机模式运行时,通常会使用该服务 |

| nova-compute | 通过守护程序API创建和终止虚拟机实例的辅助程序守护程序 |

| nova-placement-api | 跟踪每个提供商的库存和使用情况 |

| nova-scheduler | 从队列中获取虚拟机实例请求,并确定它在哪台计算服务器主机上运行 |

| nova-conductor | 调解nova计算服务和数据库之间的交互。它消除了nova计算服务对云数据库的直接访问。nova导体模块水平伸缩。但是,不要在nova计算服务运行的节点上部署它 |

| nova-consoleauth | 为控制台代理提供的用户授权令牌。请参阅 nova-novncproxy和nova-xvpvncproxy。该服务必须正在运行,控制台代理才能起作用。您可以在集群配置中针对单个nova-consoleauth服务运行这两种类型的代理 rocky版不推荐使用,并且以后会删除 |

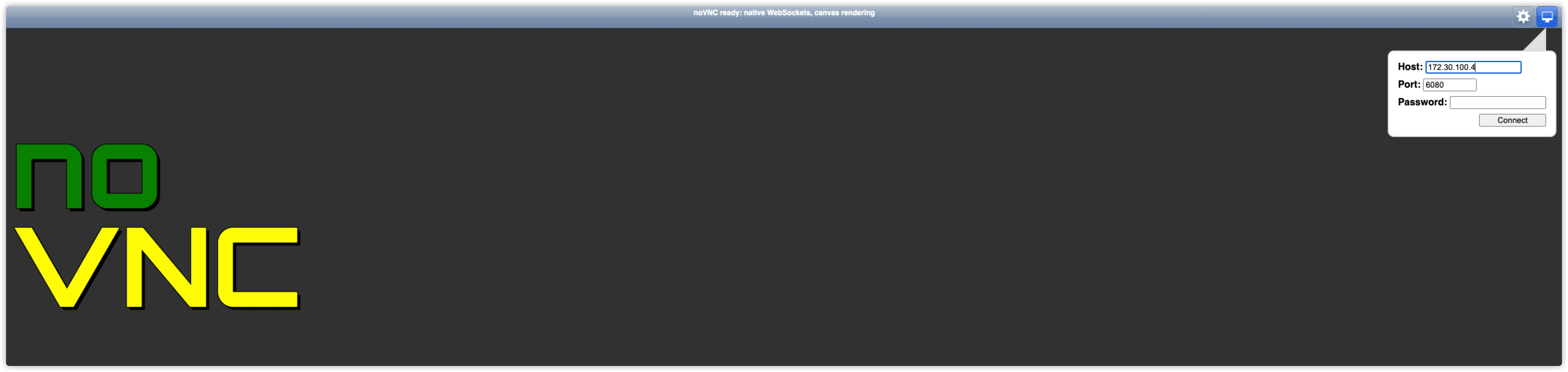

| nova-novncproxy | 提供用于通过VNC连接访问正在运行的实例的代理。支持基于浏览器的novnc客户端。 |

| nova-spicehtml5proxy | 提供用于通过SPICE连接访问正在运行的实例的代理。支持基于浏览器的HTML5客户端。 |

| nova-xvpvncproxy | 提供用于通过VNC连接访问正在运行的实例的代理。支持特定于OpenStack的Java客户端。 |

Install and configure the controller node

6.1 Create the nova_api, nova, nova_cell0, and placement databases and grant access

mysql -e "CREATE DATABASE nova_api;"

mysql -e "CREATE DATABASE nova;"

mysql -e "CREATE DATABASE nova_cell0;"

mysql -e "CREATE DATABASE placement;"

mysql -e "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' \

IDENTIFIED BY 'PLACEMENT_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \

IDENTIFIED BY 'PLACEMENT_DBPASS';"

6.2 Source the admin credentials to gain access to admin-only CLI commands

source /opt/admin-openrc

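6.3 Create the Compute service credentials

6.3.1 Create the nova user

The password is NOVA_PASS. Set it either non-interactively or interactively (pick one)

Non-interactive: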

$ openstack user create --domain default \

--password NOVA_PASS nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | ebe9b1934a2e4c8ca9c177af647851b1 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Interactive:

openstack user create --domain default --password-prompt nova

6.3.2 Add the admin role to the nova user

openstack role add --project service --user nova admin

6.3.3 Create the nova service entity

$ openstack service create --name nova \

--description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 412f485718f44759b6c3cd46b1d624e6 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

6.3.4 Create the Compute API service endpoints

$ openstack endpoint create --region RegionOne \

compute public http://openstack-controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | cc0a7c21acd0450998760841dd9a11c0 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 412f485718f44759b6c3cd46b1d624e6 |

| service_name | nova |

| service_type | compute |

| url | http://openstack-controller:8774/v2.1 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

compute internal http://openstack-controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 69acbdd4f0114a339f8b62d9118ce137 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 412f485718f44759b6c3cd46b1d624e6 |

| service_name | nova |

| service_type | compute |

| url | http://openstack-controller:8774/v2.1 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

compute admin http://openstack-controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5382d617406a4dba8280dc375dd53329 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 412f485718f44759b6c3cd46b1d624e6 |

| service_name | nova |

| service_type | compute |

| url | http://openstack-controller:8774/v2.1 |

+--------------+----------------------------------+
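The three endpoint commands differ only in the interface name. The sketch below prints them for any service. `endpoint_cmds` is a hypothetical helper. It only prints the commands, so review the output before piping it to `sh` with the admin credentials sourced.

```shell
#!/bin/sh
# endpoint_cmds SERVICE_TYPE URL [REGION]
# Hypothetical helper: print one `openstack endpoint create` command per
# interface (public, internal, admin) for a single service.
endpoint_cmds() {
    svc="$1"
    url="$2"
    region="${3:-RegionOne}"
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$region" "$svc" "$iface" "$url"
    done
}
```

For example, `endpoint_cmds compute http://openstack-controller:8774/v2.1 | sh` creates the Compute endpoints. `endpoint_cmds placement http://openstack-controller:8778 | sh` covers the Placement endpoints of 6.3.8.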

6.3.5 Create the Placement service user placement

Set the password to PLACEMENT_PASS.

Set the password either interactively or non-interactively (choose one).

Non-interactive:

$ openstack user create --domain default --password PLACEMENT_PASS placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 5ab24083149e4adf978c43439b87c982 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Interactive:

openstack user create --domain default --password-prompt placement

6.3.6 Add the placement user to the service project with the admin role

openstack role add --project service --user placement admin

6.3.7 Create the Placement API entry in the service catalog

$ openstack service create --name placement \

--description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 274104c9a16f4b728bd7f484d3c54d3e |

| name | placement |

| type | placement |

+-------------+----------------------------------+

6.3.8 Create the Placement API service endpoints

$ openstack endpoint create --region RegionOne \

placement public http://openstack-controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | c3afd275f71a4406a701d16ad24aa325 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 274104c9a16f4b728bd7f484d3c54d3e |

| service_name | placement |

| service_type | placement |

| url | http://openstack-controller:8778 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

placement internal http://openstack-controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2ddc86a3b46d45489ebbedbd54fc3c0c |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 274104c9a16f4b728bd7f484d3c54d3e |

| service_name | placement |

| service_type | placement |

| url | http://openstack-controller:8778 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

placement admin http://openstack-controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | d0714a417aa44c0180d59be843e1d40d |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 274104c9a16f4b728bd7f484d3c54d3e |

| service_name | placement |

| service_type | placement |

| url | http://openstack-controller:8778 |

+--------------+----------------------------------+

6.4 Install and configure components

6.4.1 Install the packages

yum -y install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler openstack-nova-placement-api

6.4.2 Edit the /etc/nova/nova.conf file and complete the following actions

1. In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

2. In the [api_database], [database], and [placement_database] sections, configure database access:

[api_database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[placement_database]

# ...

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

3. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

4. In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

5. In the [DEFAULT] section, set the my_ip option to the management interface IP address of the controller node:

[DEFAULT]

# ...

my_ip = 172.30.100.4

6. In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

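By default, Compute uses an internal firewall driver. Because the Networking service includes its own firewall driver, you must disable the Compute firewall driver by using the nova.virt.firewall.NoopFirewallDriver firewall driver.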

7. In the [vnc] section, configure the VNC proxy to use the management interface IP address of the controller node:

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

8. In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://controller:9292

9. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

10. In the [placement] section, configure the Placement API:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

Apply these changes with the following commands:

\cp /etc/nova/nova.conf{,.bak}

grep '^[a-zA-Z[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@openstack-controller

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 172.30.100.4

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@openstack-controller/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@openstack-controller/nova

openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@openstack-controller/placement

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://openstack-controller:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers openstack-controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf glance api_servers http://openstack-controller:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://openstack-controller:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

MD5 checksum of the resulting file:

$ md5sum /etc/nova/nova.conf

44436fe1f334fdfdf0b5efdbf4250e94 /etc/nova/nova.conf
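Besides comparing the MD5 checksum, you can spot-check individual values. The sketch below is a small INI reader in awk. `ini_get` is a hypothetical helper. It assumes `key = value` lines without continuations, which holds for the filtered nova.conf above. If `crudini` is installed, `crudini --get FILE SECTION KEY` does the same job.

```shell
#!/bin/sh
# ini_get FILE SECTION KEY
# Print the value of KEY in [SECTION]; exit status 1 if it is not found.
# The first matching key in the section wins.
ini_get() {
    awk -v sec="$2" -v key="$3" '
        /^[ \t]*\[/ {
            # Section header: strip the brackets and remember if it matches.
            s = $0
            sub(/^[ \t]*\[/, "", s)
            sub(/][ \t]*$/, "", s)
            in_sec = (s == sec)
            next
        }
        in_sec {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; found = 1; exit }
        }
        END { if (!found) exit 1 }
    ' "$1"
}

# Example (only runs where the file exists): check two values set above.
if [ -r /etc/nova/nova.conf ]; then
    ini_get /etc/nova/nova.conf DEFAULT my_ip
    ini_get /etc/nova/nova.conf vnc server_listen
fi
```

Because the key is matched only inside the requested section, keys that appear in several sections are read correctly. Examples are username and password, which appear in both [keystone_authtoken] and [placement].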

6.4.3 Due to a packaging bug, you must enable access to the Placement API by adding the following configuration to /etc/httpd/conf.d/00-nova-placement-api.conf

6.4.3.1 Back up the file and append the configuration

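Start the appended content with an empty line, otherwise the resulting file is malformed. Two empty lines are added here: the first keeps the format correct, and the second keeps it tidy, so that there is one blank line between blocks.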

\cp /etc/httpd/conf.d/00-nova-placement-api.conf{,.bak}

cat >> /etc/httpd/conf.d/00-nova-placement-api.conf <<EOF

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

EOF

MD5 checksum of the resulting file:

$ md5sum /etc/httpd/conf.d/00-nova-placement-api.conf

4b31341049e863449951b0c76fe17bde /etc/httpd/conf.d/00-nova-placement-api.conf
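`cat >>` appends unconditionally, so running 6.4.3.1 twice leaves two `<Directory /usr/bin>` blocks. The sketch below is an idempotent variant. `append_usr_bin_block` is a hypothetical helper name. It indents the block, so a file written by it will not match the MD5 value shown above.

```shell
#!/bin/sh
# append_usr_bin_block FILE
# Append the <Directory /usr/bin> block only if FILE does not contain it yet.
append_usr_bin_block() {
    conf="$1"
    if grep -q '^<Directory /usr/bin>' "$conf"; then
        echo "$conf already contains <Directory /usr/bin>, skipping"
        return 0
    fi
    # The leading empty line separates the block from the existing content.
    cat >> "$conf" <<'EOF'

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
EOF
}
```

On the controller: `append_usr_bin_block /etc/httpd/conf.d/00-nova-placement-api.conf && systemctl restart httpd`.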

6.4.3.2 Restart httpd

systemctl restart httpd

6.4.4 Sync the databases (warnings in the output can be ignored)

6.4.4.1 Sync the databases

# Sync the nova-api and placement databases

$ su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database

$ su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

$ su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

536383cb-03e4-48bb-bb77-4eeb1bfb9d80

# Sync the nova database

$ su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

6.4.4.2 Verify that nova cell0 and cell1 are registered correctly

$ su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | 536383cb-03e4-48bb-bb77-4eeb1bfb9d80 | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+
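When the installation is scripted, this check can be automated. The sketch below reads the `list_cells` table on stdin and fails unless rows for both cell0 and cell1 are present. `cells_ok` is a hypothetical helper.

```shell
#!/bin/sh
# cells_ok
# Read `nova-manage cell_v2 list_cells` output on stdin; succeed only if
# the Name column contains both cell0 and cell1.
cells_ok() {
    out=$(cat)
    for cell in cell0 cell1; do
        if ! printf '%s\n' "$out" | grep -qE "^[|] *$cell *[|]"; then
            echo "cell $cell is not registered" >&2
            return 1
        fi
    done
    echo "cell0 and cell1 are registered"
}
```

Usage: `su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova | cells_ok`.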

6.4.5 Start the Compute services and configure them to start when the system boots

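nova-consoleauth is deprecated since 18.0.0 (Rocky) and will be removed in a future release. Console proxies should be deployed per cell. If you are performing a fresh install (not an upgrade), you probably do not need to install the nova-consoleauth service. See workarounds.enable_consoleauth for details.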

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service \

openstack-nova-consoleauth openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

After installation, the noVNC console proxy is available at 172.30.100.4:6080.

Install and configure a compute node

6.5 Install and configure components

6.5.1 Install the packages

yum -y install openstack-nova-compute openstack-utils

6.5.2 Edit the /etc/nova/nova.conf file and complete the following actions

1. In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

2. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

3. In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

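4. In the [DEFAULT] section, configure the my_ip option (my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS), replacing MANAGEMENT_INTERFACE_IP_ADDRESS with the IP address of the management network interface on the compute node: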

[DEFAULT]

# ...

my_ip = 172.30.100.5

5. In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

7. In the [vnc] section, enable and configure remote console access:

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

8. In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://controller:9292

9. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

10. In the [placement] section, configure the Placement API:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

Apply these changes with the following commands:

\cp /etc/nova/nova.conf{,.bak}

grep '^[a-zA-Z[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 172.30.100.5

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

MD5 checksum of the resulting file:

$ md5sum /etc/nova/nova.conf

fa0ddace12aaa14c6bcfe86b70efac24 /etc/nova/nova.conf
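The `grep` run before each batch of openstack-config commands keeps only the lines that begin with a letter or `[`, that is, section headers and active settings. It drops comments and blank lines from the stock file. The sketch below applies the same filter to a made-up sample file; `strip_comments` and the sample content are hypothetical. It uses the pattern `[a-zA-Z[]` because a range such as `a-Z` depends on the locale's collation and is rejected as an invalid range in the C locale.

```shell
#!/bin/sh
# strip_comments FILE
# Print only the lines of FILE that start with a letter or '[' (section
# headers and active "key = value" settings). Comment lines, indented lines
# and blank lines are dropped.
strip_comments() {
    grep '^[a-zA-Z[]' "$1"
}

# Demo on a small hypothetical sample; prints "[DEFAULT]" and "[vnc]".
sample=$(mktemp)
cat > "$sample" <<'EOF'
[DEFAULT]

#
# From nova.conf
#
#my_ip = <host_ipv4>
[vnc]
#enabled = true
EOF
strip_comments "$sample"
rm -f "$sample"
```

The backup made with `\cp` beforehand keeps the fully commented original for reference.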

6.5.3 Determine whether your compute node supports hardware acceleration for virtual machines

$ egrep -c '(vmx|svm)' /proc/cpuinfo

2

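If this command returns 1 or greater, your compute node supports hardware acceleration, which typically requires no additional configuration. If it returns 0, your compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM: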

openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
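The sketch below applies the rule above. `pick_virt_type` is a hypothetical helper, and its optional argument exists only so that it can be tested against a sample file. It switches libvirt to QEMU only when no vmx/svm flag is found, and only on a host that has openstack-config and a writable nova.conf.

```shell
#!/bin/sh
# pick_virt_type [CPUINFO]
# Print "kvm" if at least one line of CPUINFO (default /proc/cpuinfo)
# mentions the vmx (Intel VT-x) or svm (AMD-V) flag, otherwise "qemu".
pick_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c exits 1 when the count is 0; "|| true" keeps set -e happy.
    n=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)
    if [ "${n:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Only switch libvirt to QEMU when there is no hardware acceleration.
if [ "$(pick_virt_type)" = qemu ] && command -v openstack-config >/dev/null 2>&1 \
        && [ -w /etc/nova/nova.conf ]; then
    openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
fi
```

If openstack-nova-compute is already running, restart it after changing virt_type.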

6.5.4 Start the Compute service and its dependencies, and configure them to start automatically when the system boots

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Verify operation (run on the controller node)

6.6 Verify operation of the Compute service

6.6.1 Source the admin credentials to gain access to admin-only CLI commands

source /opt/admin-openrc

6.6.2 List the service components to verify that each process started and registered successfully

$ openstack compute service list --service nova-compute

+----+--------------+---------------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------------------+------+---------+-------+----------------------------+

| 6 | nova-compute | openstack-compute01 | nova | enabled | up | 2021-11-04T07:29:47.000000 |

+----+--------------+---------------------+------+---------+-------+----------------------------+

6.6.3 Discover compute hosts

$ su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 536383cb-03e4-48bb-bb77-4eeb1bfb9d80

Checking host mapping for compute host 'compute1': 83452da0-a693-4860-bcd8-028743169f0f

Creating host mapping for compute host 'compute1': 83452da0-a693-4860-bcd8-028743169f0f

Found 1 unmapped computes in cell: 536383cb-03e4-48bb-bb77-4eeb1bfb9d80
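`discover_hosts` has to be run again every time a compute node is added. The Rocky install guide also describes letting the scheduler do this periodically through the `[scheduler] discover_hosts_in_cells_interval` option. The value is in seconds, and the guide's example uses 300. The guarded sketch below is for the controller; it does nothing on a host without openstack-config or a writable nova.conf.

```shell
#!/bin/sh
# Optional: let nova-scheduler map new compute hosts by itself every 300 s
# instead of running discover_hosts by hand after each new compute node.
if command -v openstack-config >/dev/null 2>&1 && [ -w /etc/nova/nova.conf ]; then
    openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300
    systemctl restart openstack-nova-scheduler.service
fi
```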

At this point, the Compute service (nova) is installed on both the controller node and the compute node.

7. Install the Networking service (neutron) on the controller and compute nodes

neutron services

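| Service | Description |

|---|---|

| neutron-server | Listens on port 9696; API that accepts and responds to external network management requests |

| neutron-linuxbridge-agent | Creates the bridge network interfaces |

| neutron-dhcp-agent | Assigns IP addresses |

| neutron-metadata-agent | Works with nova-metadata-api to customize virtual machines |

| L3-agent | Implements layer-3 (network layer) networking |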

Install and configure the controller node

7.1 Create the neutron database and grant privileges

mysql -e "CREATE DATABASE neutron;"

mysql -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';"

mysql -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';"

7.2 Source the admin credentials to gain access to admin-only CLI commands

source /opt/admin-openrc

7.3 Create the service credentials

7.3.1 Create the neutron user

Set the password to NEUTRON_PASS.

Set the password either interactively or non-interactively (choose one).

Non-interactive:

$ openstack user create --domain default --password NEUTRON_PASS neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 014a7629fb0548899be31c87494e1156 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Interactive:

openstack user create --domain default --password-prompt neutron

7.3.2 Add the admin role to the neutron user

openstack role add --project service --user neutron admin

7.3.3 Create the neutron service entity

$ openstack service create --name neutron \

--description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | 9e74ccbdaa85421894cf61c97f355dc7 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

7.4 Create the Networking service API endpoints

$ openstack endpoint create --region RegionOne \

network public http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | abe8c37741934ade89308da46501ea03 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9e74ccbdaa85421894cf61c97f355dc7 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

network internal http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 0c32f6cb44a74ec5b653ba79153e3d68 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9e74ccbdaa85421894cf61c97f355dc7 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

$ openstack endpoint create --region RegionOne \

network admin http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | d2c77ff079c94591bc8ea0b4e51be936 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9e74ccbdaa85421894cf61c97f355dc7 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

7.5 Configure networking options

You can deploy the Networking service using one of two architectures, represented by options 1 and 2.

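Option 1 deploys the simplest possible architecture, which only supports attaching instances to provider (external) networks. There are no self-service (private) networks, routers, or floating IP addresses. Only the admin user or another privileged user can manage provider networks.

Option 2 augments option 1 with layer-3 services that support attaching instances to self-service networks. The demo user or another unprivileged user can manage self-service networks, including routers that provide connectivity between self-service and provider networks. In addition, floating IP addresses provide connectivity to instances on self-service networks from external networks such as the Internet.

Self-service networks typically use overlay networks. Overlay network protocols such as VXLAN add headers that increase overhead and reduce the space available for the payload or user data. Without knowledge of the virtual network infrastructure, instances attempt to send packets using the default Ethernet maximum transmission unit (MTU) of 1500 bytes. The Networking service automatically provides the correct MTU value to instances via DHCP. However, some cloud images do not use DHCP or ignore the DHCP MTU option, and require configuration using metadata or a script.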

Networking option 1 is used here.

7.5.1 Install the packages

yum -y install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables

7.5.2 Edit the /etc/neutron/neutron.conf file and complete the following actions

1. In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

2. In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in and disable additional plug-ins:

[DEFAULT]

# ...

core_plugin = ml2

service_plugins =

3. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

4. In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

5. In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes:

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

# ...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

6. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Apply these changes with the following commands:

\cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-zA-Z[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/neutron.conf

1c4b4339f83596fa6bfdbec7a622a35e /etc/neutron/neutron.conf

7.6 Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and complete the following actions

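The ML2 plug-in uses the Linux bridge mechanism to build the layer-2 (bridging and switching) virtual networking infrastructure for instances.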

1. In the [ml2] section, enable flat and VLAN networks:

[ml2]

# ...

type_drivers = flat,vlan

2. In the [ml2] section, disable self-service networks:

[ml2]

# ...

tenant_network_types =

3. In the [ml2] section, enable the Linux bridge mechanism:

[ml2]

# ...

mechanism_drivers = linuxbridge

4. In the [ml2] section, enable the port security extension driver:

[ml2]

# ...

extension_drivers = port_security

5. In the [ml2_type_flat] section, configure the provider virtual network as a flat network:

[ml2_type_flat]

# ...

flat_networks = provider

6. In the [securitygroup] section, enable ipset to make security group rules more efficient:

[securitygroup]

# ...

enable_ipset = true

Apply these changes with the following commands:

\cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep '^[a-zA-Z[]' /etc/neutron/plugins/ml2/ml2_conf.ini.bak >/etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/plugins/ml2/ml2_conf.ini

eb38c10cfd26c1cc308a050c9a5d8aa1 /etc/neutron/plugins/ml2/ml2_conf.ini

7.7 Configure the Linux bridge agent

7.7.1 Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following actions

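The Linux bridge agent builds the layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.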

1. In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface. Here it is eth0.

2. In the [vxlan] section, disable VXLAN overlay networks:

[vxlan]

enable_vxlan = false

3. In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Make sure your Linux kernel supports network bridge filters by verifying that both of the following sysctl values are set to 1:

net.bridge.bridge-nf-call-iptables

net.bridge.bridge-nf-call-ip6tables

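To enable network bridge support, the br_netfilter kernel module typically needs to be loaded. Check your operating system's documentation for additional details on enabling this module.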

Apply these changes with the following commands:

\cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-zA-Z[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan false

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/plugins/ml2/linuxbridge_agent.ini

794b19995c83e2fc0c3fd42f506904f1 /etc/neutron/plugins/ml2/linuxbridge_agent.ini
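The mapping above hard-codes eth0. The sketch below finds the interface name on a node. It assumes, as in this lab with one NIC per node, that the provider network is the one carrying the default route. `default_route_dev` is a hypothetical helper that parses `ip route show default` output.

```shell
#!/bin/sh
# default_route_dev
# Read `ip route show default` output on stdin and print the device name
# that follows "dev" on the default route (nothing if there is none).
default_route_dev() {
    awk '$1 == "default" {
        for (i = 2; i < NF; i++)
            if ($i == "dev") { print $(i + 1); exit }
    }'
}

# Example: show the detected name on this host (if iproute2 is installed).
if command -v ip >/dev/null 2>&1; then
    dev=$(ip route show default | default_route_dev)
    echo "provider interface candidate: ${dev:-<none found>}"
fi
```

If the detected name differs from eth0, use it in the mapping instead. For example: `openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings "provider:$dev"`.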

7.7.2 Enable network bridge filter support in the Linux kernel

Write the following content to /etc/sysctl.d/openstack-rocky-bridge.conf

cat > /etc/sysctl.d/openstack-rocky-bridge.conf <<EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

Load the module and apply the settings:

$ modprobe br_netfilter && sysctl -p /etc/sysctl.d/openstack-rocky-bridge.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1
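Two things are easy to miss here. First, the values are worth re-checking after the module is loaded. Second, `modprobe` does not survive a reboot. The sketch below handles both. `check_bridge_nf` and `persist_br_netfilter` are hypothetical helpers, and their optional directory arguments exist only for testing. Listing the module in /etc/modules-load.d lets systemd-modules-load load it at boot. That should happen before systemd-sysctl applies the net.bridge settings.

```shell
#!/bin/sh
# check_bridge_nf [PROC_SYS_DIR]
# Print both net.bridge sysctls and fail unless both are 1.
# PROC_SYS_DIR defaults to /proc/sys.
check_bridge_nf() {
    procdir="${1:-/proc/sys}"
    rc=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        f="$procdir/net/bridge/$key"
        if [ ! -r "$f" ]; then
            echo "net.bridge.$key: missing (br_netfilter not loaded?)"
            rc=1
        elif [ "$(cat "$f")" != 1 ]; then
            echo "net.bridge.$key = $(cat "$f"), expected 1"
            rc=1
        else
            echo "net.bridge.$key = 1"
        fi
    done
    return "$rc"
}

# persist_br_netfilter [ROOT]
# Make br_netfilter load at every boot via systemd-modules-load.
# ROOT defaults to empty, i.e. the real /etc.
persist_br_netfilter() {
    root="${1:-}"
    mkdir -p "$root/etc/modules-load.d"
    printf 'br_netfilter\n' > "$root/etc/modules-load.d/br_netfilter.conf"
}
```

As root on each node running the Linux bridge agent: `persist_br_netfilter && check_bridge_nf`.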

7.8 Configure the DHCP agent

The DHCP agent provides DHCP services for virtual networks.

Edit the /etc/neutron/dhcp_agent.ini file and complete the following actions:

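In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver. Also enable isolated metadata, so that instances on provider networks can access metadata over the network: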

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Apply these changes with the following commands:

\cp /etc/neutron/dhcp_agent.ini{,.bak}

grep '^[a-zA-Z[]' /etc/neutron/dhcp_agent.ini.bak >/etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/dhcp_agent.ini

33a1e93e1853796070d5da0773496665 /etc/neutron/dhcp_agent.ini

7.9 Configure the metadata agent

The metadata agent provides configuration information, such as credentials, to instances.

Edit the /etc/neutron/metadata_agent.ini file and complete the following actions:

In the [DEFAULT] section, configure the metadata host and the shared secret:

[DEFAULT]

# ...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

Replace METADATA_SECRET with a suitable secret for the metadata proxy.

Apply these changes with the following commands:

\cp /etc/neutron/metadata_agent.ini{,.bak}

grep '^[a-zA-Z[]' /etc/neutron/metadata_agent.ini.bak >/etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/metadata_agent.ini

e8b90a011b94fece31d33edfd8bc72b6 /etc/neutron/metadata_agent.ini
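METADATA_SECRET is only a placeholder. The sketch below generates a random value from /dev/urandom. `gen_secret` is a hypothetical helper; if OpenSSL is installed, `openssl rand -hex 10` gives the same kind of string. Generate the value before running the metadata_agent.ini commands. Then reuse exactly the same value for metadata_proxy_shared_secret in the [neutron] section of nova.conf (7.10), because the two must match.

```shell
#!/bin/sh
# gen_secret [BYTES]
# Print BYTES (default 10) random bytes from /dev/urandom as lowercase hex,
# i.e. 20 characters by default, with no trailing newline.
gen_secret() {
    od -An -N"${1:-10}" -tx1 /dev/urandom | tr -d ' \n'
}
```

Usage: run `METADATA_SECRET=$(gen_secret)` first, then use `"$METADATA_SECRET"` in place of the literal METADATA_SECRET in both openstack-config commands. For example: `openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret "$METADATA_SECRET"`.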

7.10 Configure the Compute service to use the Networking service

Edit the /etc/nova/nova.conf file and perform the following actions:

In the [neutron] section, configure the access parameters, enable the metadata proxy, and configure the secret:

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

Apply the changes with the following commands:

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET

MD5 checksum of the resulting file:

$ md5sum /etc/nova/nova.conf

81feca9d18ee91397cc973d455bfa271 /etc/nova/nova.conf
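If the secrets in `metadata_agent.ini` (7.9) and in `nova.conf` (7.10) differ, the Compute metadata API rejects the requests forwarded by the metadata agent, so instances cannot retrieve their metadata. The sketch below (hypothetical helpers `secret_of` and `same_secret`, using only sed) compares the uncommented `metadata_proxy_shared_secret` lines of the two files. It assumes the key appears at most once per file, which holds for the configuration built here:

```shell
#!/usr/bin/env bash
# secret_of FILE: print the value of the first uncommented metadata_proxy_shared_secret line.
secret_of() {
  sed -n 's/^metadata_proxy_shared_secret[[:blank:]]*=[[:blank:]]*//p' "$1" | head -n 1
}

# same_secret FILE1 FILE2: hypothetical check that both files carry the same, non-empty secret.
same_secret() {
  local a b
  a=$(secret_of "$1")
  b=$(secret_of "$2")
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo "metadata secret matches"
  else
    echo "metadata secret mismatch or missing: '$a' vs '$b'"
    return 1
  fi
}

# Usage on the controller:
# same_secret /etc/neutron/metadata_agent.ini /etc/nova/nova.conf
```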

7.11 Finalize the installation

7.11.1 Create the symbolic link

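The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini pointing to the ML2 plug-in configuration file /etc/neutron/plugins/ml2/ml2_conf.ini. If this symbolic link does not exist, create it with the following command: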

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
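Running the `ln -s` command a second time fails with "File exists". An idempotent variant, sketched below with a hypothetical `ensure_symlink` helper, creates the link only when it is missing and complains if an existing link points somewhere else:

```shell
#!/usr/bin/env bash
# ensure_symlink TARGET LINK: hypothetical helper; create LINK -> TARGET only if needed.
ensure_symlink() {
  local target="$1" link="$2"
  if [ -L "$link" ]; then
    if [ "$(readlink "$link")" = "$target" ]; then
      echo "$link already points to $target"
    else
      echo "$link points to $(readlink "$link"), not $target" >&2
      return 1
    fi
  elif [ -e "$link" ]; then
    echo "$link exists but is not a symlink" >&2
    return 1
  else
    ln -s "$target" "$link" && echo "created $link -> $target"
  fi
}

# Usage: ensure_symlink /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
```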

7.11.2 Populate the database (the output should end with OK)

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

7.11.3 Restart the Compute API service

systemctl restart openstack-nova-api.service

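7.11.4 Start the Networking services and configure them to start at boot

Of the two networking options in the official guide, this deployment uses the first one (provider networks).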

systemctl enable neutron-server.service \
neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
neutron-metadata-agent.service

systemctl start neutron-server.service \
neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
neutron-metadata-agent.service
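A successful `systemctl start` does not guarantee that a service stays up. `systemctl is-active --quiet UNIT` exits with status 0 only when the unit is active, so the four services can be checked in a loop. A minimal sketch, with the hypothetical helper name `check_services`:

```shell
#!/usr/bin/env bash
# check_services UNIT...: hypothetical helper; report each unit's state and
# return 1 if any of them is not active.
check_services() {
  local unit rc=0
  for unit in "$@"; do
    if systemctl is-active --quiet "$unit"; then
      echo "$unit: active"
    else
      echo "$unit: NOT active (see: journalctl -u $unit)"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the controller:
# check_services neutron-server.service neutron-linuxbridge-agent.service \
#   neutron-dhcp-agent.service neutron-metadata-agent.service
```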

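7.11.5 Verify

# Once the services are running, output like the following indicates success: the alive column should show a smiley face :-) for every agent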

$ neutron agent-list

neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.

+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+
| id                                   | agent_type         | host       | availability_zone | alive | admin_state_up | binary                    |
+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+
| beffcac6-745e-449f-bad8-7f2e4fa973f2 | Linux bridge agent | controller |                   | :-)   | True           | neutron-linuxbridge-agent |
+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+
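With several agents it is easy to miss a failed one by eye. The sketch below (hypothetical helper `check_agents_alive`, plain awk) reads the table printed by `neutron agent-list`, or by `openstack network agent list` as the deprecation notice recommends, from standard input. It locates the alive column by its header, prints every agent that does not show `:-)`, and exits non-zero if any agent is down or no agent rows were found:

```shell
#!/usr/bin/env bash
# check_agents_alive: hypothetical helper; reads an agent table on stdin, prints
# agents whose alive column is not ":-)", and exits 1 if any are down or none are listed.
check_agents_alive() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    /^\|/ {
      if (!alive) {                      # the first "|" row is the header
        for (i = 1; i <= NF; i++) {
          h = tolower(trim($i)); gsub(/_/, " ", h)
          if (h == "alive") alive = i
          else if (h == "agent type") type = i
          else if (h == "host") host = i
        }
        next
      }
      total++
      if (trim($alive) != ":-)") { bad++; print "not alive: " trim($type) " on " trim($host) }
    }
    END { printf "%d agent(s) listed, %d not alive\n", total, bad; exit (bad > 0 || total == 0) }
  '
}
```

For example: `neutron agent-list | check_agents_alive`. Border lines and the deprecation notice do not start with `|`, so they are ignored.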

Install and configure the compute node

7.12 Install the packages

yum -y install openstack-neutron-linuxbridge ebtables ipset openstack-utils

7.13 Configure the common component

Edit the /etc/neutron/neutron.conf file and complete the following actions:

1. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

2. In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

3. In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Apply the changes with the following commands:

\cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

MD5 checksum of the resulting file:

$ md5sum /etc/neutron/neutron.conf

9c47ffb59b23516b59e7de84a39bcbe8 /etc/neutron/neutron.conf
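The options above are applied with one `openstack-config --set` call each. The same list can be kept as data and applied in a loop. The sketch below assumes bash; `apply_ini_settings` is a hypothetical helper that reads `SECTION KEY VALUE` lines from standard input, and setting the (equally hypothetical) variable `OPENSTACK_CONFIG=echo` turns it into a dry run that only prints the calls:

```shell
#!/usr/bin/env bash
# apply_ini_settings FILE: hypothetical helper; reads "SECTION KEY VALUE" lines
# from stdin and applies each one with openstack-config --set.
# Blank lines and lines starting with # are skipped; VALUE may contain spaces.
apply_ini_settings() {
  local file="$1" section key value
  while read -r section key value; do
    case "$section" in ''|'#'*) continue ;; esac
    ${OPENSTACK_CONFIG:-openstack-config} --set "$file" "$section" "$key" "$value" || return 1
  done
}

# Usage on the compute node (same values as the commands above):
# apply_ini_settings /etc/neutron/neutron.conf <<'EOF'
# DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller
# DEFAULT auth_strategy keystone
# keystone_authtoken www_authenticate_uri http://controller:5000
# keystone_authtoken auth_url http://controller:5000
# keystone_authtoken memcached_servers controller:11211
# keystone_authtoken auth_type password
# keystone_authtoken project_domain_name default
# keystone_authtoken user_domain_name default
# keystone_authtoken project_name service
# keystone_authtoken username neutron
# keystone_authtoken password NEUTRON_PASS
# oslo_concurrency lock_path /var/lib/neutron/tmp
# EOF
```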

7.14 Configure the networking options

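7.14.1 Configure the Linux bridge agent

The Linux bridge agent builds the layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.

7.14.1.1 Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following actions:

1. In the [linux_bridge] section, map the provider virtual network to the provider physical network interface: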

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

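Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface; in this environment it is eth0.

2. In the [vxlan] section, disable VXLAN overlay networks: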

[vxlan]

enable_vxlan = false

3. In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
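PROVIDER_INTERFACE_NAME must be the name of an interface that actually exists on the compute node. This environment uses eth0, but with predictable interface naming a CentOS 7 host may use names such as ens33 instead. The following minimal sketch (hypothetical helper `check_mapping_iface`) takes a single `physical_network:interface` mapping, such as `provider:eth0`, and checks that the interface exists under `/sys/class/net`; comma-separated lists of mappings are not handled:

```shell
#!/usr/bin/env bash
# check_mapping_iface MAPPING [SYSNET]: hypothetical helper. MAPPING has the form
# physical_network:interface (e.g. provider:eth0); SYSNET defaults to /sys/class/net.
# Returns 0 if the interface exists, 1 if it does not, 2 if MAPPING is malformed.
check_mapping_iface() {
  local mapping="$1" sysnet="${2:-/sys/class/net}"
  local iface="${mapping#*:}"            # text after the first ":"
  if [ -z "$iface" ] || [ "$iface" = "$mapping" ]; then
    echo "malformed mapping: $mapping" >&2
    return 2
  fi
  if [ -e "$sysnet/$iface" ]; then
    echo "$iface exists"
  else
    echo "$iface not found under $sysnet" >&2
    return 1
  fi
}

# Usage on the compute node: check_mapping_iface provider:eth0
```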

Apply the changes with the following commands:

\cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan false

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true